Web scraping is simply the process of gathering information from the Internet. With web scraping tools, one can download structured data from the web and use it for analysis in an automated fashion. WebHarvy is an interesting product that has emerged as a widely used scraping tool.
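This is a minimal sketch of what "pulling structured data from a page" can look like, using only Python's standard-library `html.parser`. It assumes the data sits in a plain HTML `<table>` whose first row holds the column headers. `TableRowParser` and `table_to_records` are hypothetical names chosen for illustration; they are not part of WebHarvy or any other tool mentioned here.

```python
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row (illustrative sketch)."""

    def __init__(self):
        super().__init__()
        self.rows = []    # finished rows, each a list of cell strings
        self._row = None  # row currently being built
        self._cell = None # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)


def table_to_records(html):
    """Use the first row as headers and turn every later row into a dict."""
    parser = TableRowParser()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    headers, *body = parser.rows
    return [dict(zip(headers, row)) for row in body]


if __name__ == "__main__":
    sample = """
    <table>
      <tr><th>Product</th><th>Price</th></tr>
      <tr><td>Widget</td><td>9.99</td></tr>
      <tr><td>Gadget</td><td>24.50</td></tr>
    </table>
    """
    # prints {'Product': 'Widget', 'Price': '9.99'} then {'Product': 'Gadget', 'Price': '24.50'}
    for record in table_to_records(sample):
        print(record)
```

Real pages are rarely this tidy. Point-and-click tools exist largely to hide this parsing step from the user.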

They provide premium proxies to suit your requirements and offer top-tier web scraping software. Whatever tool you use should be able to complete the scraping job quickly enough not to miss important information as it appears. A substantial delay in data collection can cause you to miss opportunities that you might otherwise have been able to exploit.
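Proxy pools are usually used to spread requests across many IP addresses. Below is a minimal sketch of plain round-robin rotation built on the standard library's `urllib.request.ProxyHandler`. The proxy URLs are hypothetical placeholders. Commercial providers often rotate IPs on their own side, so this only shows the general idea, not how any particular vendor works.

```python
import itertools
import urllib.request


class ProxyRotator:
    """Hand out proxies in round-robin order so requests are spread across a pool."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("at least one proxy URL is required")
        self._pool = itertools.cycle(proxy_urls)  # endless round-robin iterator

    def next_proxy(self):
        return next(self._pool)

    def next_opener(self):
        """Build a urllib opener that routes HTTP and HTTPS through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


# Hypothetical proxy endpoints; replace with the ones your provider gives you.
rotator = ProxyRotator(["http://proxy-1.example:8080", "http://proxy-2.example:8080"])
```

Each request would then call `rotator.next_opener().open(url, timeout=10)`. A short timeout keeps one slow proxy from stalling the whole collection run.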

Simple Web Extraction

Well, I must say it is dead simple to use. You select the data you are interested in simply by clicking on it, and it exports that data as JSON or Excel sheets. It offers a free plan with which you can scrape 200 pages in just 40 minutes. There is also a very large pool of datacenter, mobile and residential proxies. It suits people with anything from little to advanced programming knowledge. Web scraping itself is not illegal, but people need to be careful about how they use the technique, since there are still many grey areas around the legal enforcement of web scraping. If you want to reduce the chance of legal disputes, it is important to understand the legal risks involved.
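A common first precaution is to respect a site's `robots.txt` rules. Here is a minimal sketch using the standard library's `urllib.robotparser`. Note that `robots.txt` is a crawling convention, not a legal test, so passing this check does not settle any of the legal questions above. `allowed_to_fetch` and the `my-scraper` user agent are hypothetical names.

```python
from urllib import robotparser


def allowed_to_fetch(robots_txt, user_agent, url):
    """Return True if the given robots.txt rules permit user_agent to fetch url.

    robots_txt is the file's text, already downloaded (e.g. from https://site/robots.txt).
    """
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


rules = "User-agent: *\nDisallow: /private/\n"
print(allowed_to_fetch(rules, "my-scraper", "https://example.com/products"))      # True
print(allowed_to_fetch(rules, "my-scraper", "https://example.com/private/data"))  # False
```

If you have the site's address rather than the file's text, `RobotFileParser.set_url()` followed by `read()` can download and parse it directly.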

- Use our services to scrape any kind of website for your business or organization.

- You can even customize the web crawlers to suit your scraping needs.

- Apify has stood out as a solution partly because of its world-class experts, who stay with you throughout.

- The option to retain historical data was not available to some customers.

- Build a data pool with the most accurate and precise data to generate reliable insights.
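"Customizing a crawler" usually means controlling which links it follows and how far it goes. Below is a minimal breadth-first crawler sketch that exposes exactly those two knobs: a `should_follow` predicate and a `max_pages` limit. The `fetch` function is passed in by the caller, so the example can run against an in-memory dict instead of the network. All names here are hypothetical, not any vendor's API.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, should_follow=lambda url: True, max_pages=50):
    """Breadth-first crawl from start_url; returns visited URLs in crawl order.

    fetch(url) -> HTML string or None and should_follow(url) -> bool are the
    caller-supplied customization hooks (hypothetical, for illustration).
    """
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # unreachable or unwanted page: skip it
            continue
        visited.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments so duplicates collapse.
            link, _ = urldefrag(urljoin(url, href))
            if link not in seen and should_follow(link):
                seen.add(link)
                queue.append(link)
    return visited


site = {
    "https://example.com/": '<a href="/a">A</a> <a href="https://other.org/">X</a>',
    "https://example.com/a": '<a href="/b#top">B</a> <a href="/">home</a>',
    "https://example.com/b": "<p>leaf</p>",
}
same_site = lambda u: u.startswith("https://example.com/")
print(crawl("https://example.com/", site.get, same_site))
```

Swapping `site.get` for a real HTTP fetcher, or tightening `same_site`, changes the crawler's behaviour without touching the traversal logic.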

It's a unique data collection platform that you can tailor to your needs. It offers a host of services such as web scraping, API integrations and ETL processes. However, you get more than mechanical data extraction: ScrapeHero has implemented AI-based quality checks that detect data quality issues and fix them. Without compromising quality, ScrapeHero handles complex JavaScript/AJAX websites, CAPTCHAs and IP blacklisting transparently. For its robust enterprise-grade data extraction and excellent customer support, Sequentum ranks among the best in the web scraping market. Different organizations have different requirements, so there is no need to worry if yours are highly specific.
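To illustrate what automated quality checks on scraped data can involve, here is a deliberately simple, rule-based sketch. It is not the AI-based approach ScrapeHero describes. It trims stray whitespace (the "fix" step), then flags records with missing required fields and exact duplicates (the "detect" step). `check_records` is a hypothetical helper, and it assumes each record is a flat dict of hashable values.

```python
def _is_blank(value):
    """Treat None and whitespace-only strings as missing."""
    return value is None or str(value).strip() == ""


def check_records(records, required_fields):
    """Return (clean_records, issues), where issues is a list of (index, problem)."""
    clean, issues, seen = [], [], set()
    for i, record in enumerate(records):
        # Fix: normalize string values by trimming surrounding whitespace.
        fixed = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        # Detect: required fields that are absent or blank.
        missing = [f for f in required_fields if _is_blank(fixed.get(f))]
        if missing:
            issues.append((i, "missing: " + ", ".join(missing)))
            continue
        # Detect: exact duplicates after normalization.
        key = tuple(sorted(fixed.items()))
        if key in seen:
            issues.append((i, "duplicate"))
            continue
        seen.add(key)
        clean.append(fixed)
    return clean, issues


rows = [
    {"name": " Widget ", "price": "9.99"},
    {"name": "Widget", "price": "9.99"},
    {"name": "", "price": "1.00"},
    {"name": "Gadget", "price": None},
]
clean, issues = check_records(rows, ["name", "price"])
print(clean)   # [{'name': 'Widget', 'price': '9.99'}]
print(issues)  # [(1, 'duplicate'), (2, 'missing: name'), (3, 'missing: price')]
```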

Predictive Analysis:

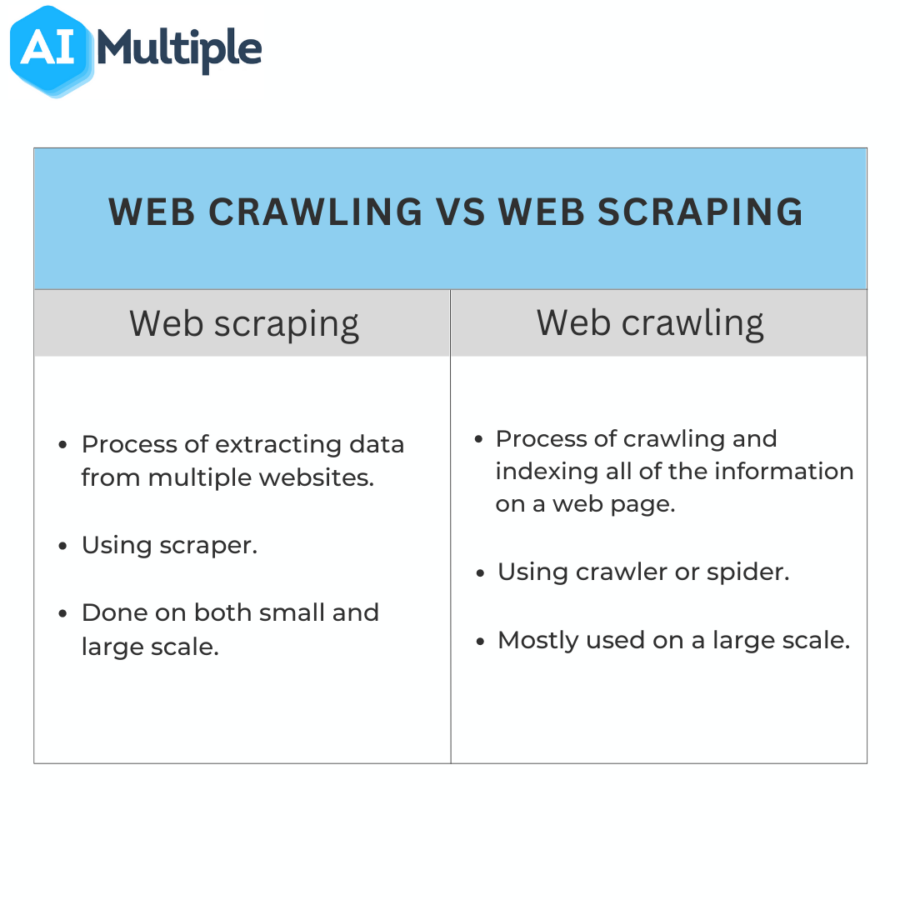

No matter how easy the code is to write, an expert coder is needed to complete the process every time you want to scrape a site. Fortunately, you can use a pre-built, AI-powered tool that safely handles code-free data extraction. Scrapinghub claims to turn websites into useful data with industry-leading technology. Its "Data on demand" service covers large and small scraping projects, with accurate and reliable data feeds delivered very quickly. Scrapinghub provides lead data extraction and also has a team of web scraping engineers.

Scraping Tools and Services

Web scraping is an increasingly widespread technique that lets users extract data from websites, often several websites at once, and convert it into a readable format for analysis.
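Scraping "several websites at once" typically means fetching pages concurrently. Below is a minimal sketch using the standard library's `ThreadPoolExecutor`. The caller supplies the `fetch` and `extract` functions (hypothetical hooks), and one failing site does not abort the batch. `fetch_url` shows one plain `urllib` fetcher you could pass in; it is an assumption for illustration, not any product's API.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def fetch_url(url, timeout=10):
    """Download a page as text with plain urllib (one possible fetch hook)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def scrape_many(urls, fetch, extract, max_workers=8):
    """Fetch several pages concurrently and run extract() on each.

    Returns {url: extracted_data}; a URL that failed maps to the exception it raised.
    """
    def job(url):
        try:
            return url, extract(fetch(url))
        except Exception as exc:  # keep one bad site from aborting the batch
            return url, exc

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(job, urls))
```

Threads suit this job because scraping spends most of its time waiting on the network. In practice you would also cap the request rate per site rather than only the total number of workers.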


Next, click the Save Table activity that follows the Scrape structured data activity. It is already configured so that the resulting Excel file will be placed in the same folder as the robot. Finally, before creating a ".neek" file (the file format of ElectroNeek's Studio, which can only be run in Studio by the robot runner), you need to check your data structure.

Little upkeep is needed afterwards, since automated web scrapers require almost no ongoing maintenance. Backing up your GitHub repositories ensures that you can easily access and recover your data if you lose it on your GitHub account. Apify offers many modules, called actors, for tasks such as data processing, turning websites into APIs, transforming data, crawling websites and running headless Chrome.
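For readers working outside ElectroNeek, this is a rough code equivalent of the Save Table step: write scraped rows into the folder you choose. This minimal sketch writes CSV, which Excel opens directly, and JSON, because both need only the standard library; producing a true `.xlsx` workbook would need a third-party package. `save_table` and the `scraped_data` file name are hypothetical.

```python
import csv
import json
from pathlib import Path


def save_table(records, folder, name="scraped_data"):
    """Write records (a list of dicts) to <name>.csv and <name>.json in folder.

    Returns the two paths written. Missing keys become empty CSV cells.
    """
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)
    fieldnames = []
    for record in records:  # union of keys, in first-seen order
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)
    csv_path = folder / f"{name}.csv"
    with csv_path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(records)
    json_path = folder / f"{name}.json"
    json_path.write_text(json.dumps(records, indent=2, ensure_ascii=False), encoding="utf-8")
    return csv_path, json_path
```

To mirror "the same folder as the robot", a script could pass `Path(__file__).parent` as `folder`.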